NASA’s next Mars rover will use AI to be a better science partner

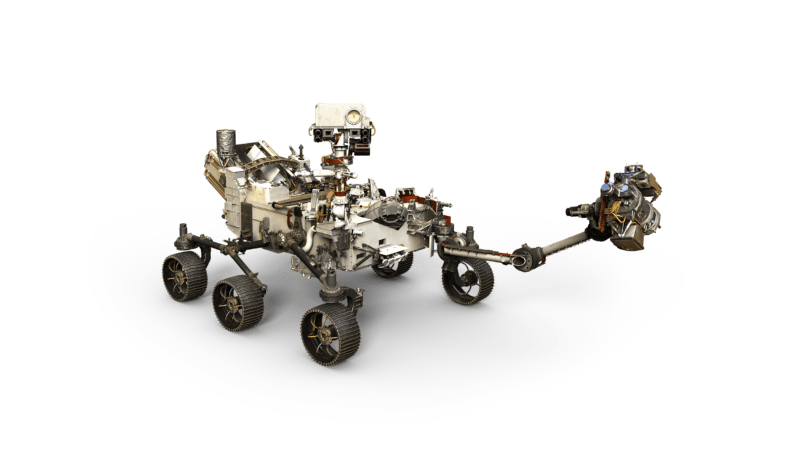

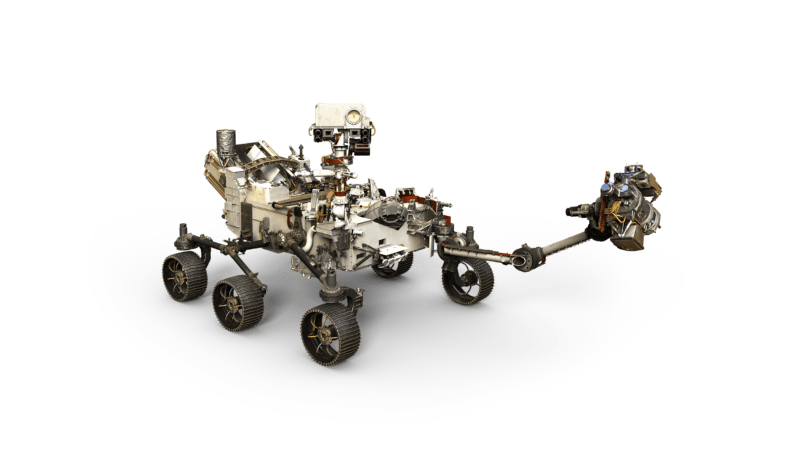

NASA can’t yet put a scientist on Mars. But in its next rover mission to the Red Planet, NASA’s Jet Propulsion Laboratory is hoping to use artificial intelligence to at least put the equivalent of a talented research assistant there. Steve Chien, head of the AI Group at NASA JPL, envisions working with the Mars 2020 rover “much more like [how] you would interact with a graduate student instead of a rover that you typically have to micromanage.”

The communications delay between Earth and Mars, about 13 minutes on average, means that every movement and experiment by past and current Martian rovers has had to be meticulously planned. More recent rovers can recognize hazards and perform some tasks autonomously, but they still place great demands on their support teams.

Chien sees AI’s future role in the human spaceflight program as one in which humans focus on the hard parts, such as directing robots in a natural way, while the machines operate autonomously and report back with high-level summaries.

“AI will be almost like a partner with us,” Chien predicted. “It’ll try this, and then we’ll say, ‘No, try something that’s more elongated, because I think that might look better,’ and then it tries that. It understands what elongated means, and it knows a lot of the details, like trying to fly the formations. That’s the next level.

“Then, of course, at the dystopian level it becomes sentient,” Chien joked. But he doesn’t see that happening soon.

Old-school autonomy

NASA has a long history with AI and machine-learning technologies, Chien said. Much of that history has focused on using machine learning to help interpret extremely large amounts of data. While most of that work involved spacecraft data sent back to Earth for processing, there’s a good reason to put more intelligence directly on the spacecraft: it helps manage the volume of data that has to be sent home.

Earth Observing One was an early example of putting intelligence aboard a spacecraft. Launched in November 2000, EO-1 was originally planned for a one-year mission, part of which was to test how well basic AI could handle some scientific tasks onboard. One of the AI systems tested aboard EO-1 was the Autonomous Sciencecraft Experiment (ASE), a software suite that allowed the satellite to make decisions based on data collected by its imaging sensors. ASE’s onboard science algorithms analyzed image data to detect trigger conditions that should make the spacecraft pay closer attention to a target, such as newly discovered features of interest or changes relative to previous observations. The software could also detect cloud cover and edit it out of the image packages transmitted home, and it could adjust the satellite’s activities based on the science collected on a previous orbit.
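The kind of onboard screening described above can be illustrated with a minimal Python sketch. It assumes each scene arrives as a small grid of reflectance values and uses a simple brightness threshold as a stand-in cloud test; it also simplifies cloud handling by dropping mostly cloudy scenes rather than editing clouds out pixel by pixel. The function names and every threshold are hypothetical, not EO-1’s actual algorithms or parameters.

```python
from typing import List

Image = List[List[float]]  # hypothetical: per-pixel reflectance, 0.0 (dark) to 1.0 (bright)

CLOUD_BRIGHTNESS = 0.8    # hypothetical: pixels brighter than this are treated as cloud
MAX_CLOUD_FRACTION = 0.5  # hypothetical: scenes that are mostly cloud are dropped
CHANGE_DELTA = 0.2        # hypothetical: per-pixel difference that counts as a change
CHANGE_TRIGGER = 0.1      # hypothetical: fraction of changed pixels that triggers follow-up


def cloud_fraction(img: Image) -> float:
    """Fraction of pixels bright enough to be treated as cloud."""
    pixels = [p for row in img for p in row]
    return sum(p > CLOUD_BRIGHTNESS for p in pixels) / len(pixels)


def changed_fraction(current: Image, previous: Image) -> float:
    """Fraction of pixels that differ noticeably from the previous observation."""
    pairs = [(c, p) for crow, prow in zip(current, previous) for c, p in zip(crow, prow)]
    return sum(abs(c - p) > CHANGE_DELTA for c, p in pairs) / len(pairs)


def screen(current: Image, previous: Image) -> str:
    """Decide what to do with a new scene: 'discard', 'follow_up', or 'downlink'."""
    if cloud_fraction(current) > MAX_CLOUD_FRACTION:
        return "discard"    # mostly cloud: not worth the downlink bandwidth
    if changed_fraction(current, previous) > CHANGE_TRIGGER:
        return "follow_up"  # the scene changed: flag it for closer attention
    return "downlink"       # ordinary scene: send it home as usual


previous = [[0.2, 0.2], [0.2, 0.2]]
current = [[0.2, 0.7], [0.2, 0.2]]  # one pixel brightened sharply
print(screen(current, previous))    # follow_up
```

Checking clouds first keeps the change test from firing on weather rather than on the surface itself.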

With volcano imagery, for example, Chien said, JPL trained the machine-learning software to recognize volcanic eruptions from spectral and image data. Once the software spotted an eruption, it would carry out pre-programmed policies on how to use that data and schedule follow-up observations. Scientists might set a policy like this one: if the spacecraft spots a thermal emission above two megawatts, it should keep observing that spot on the next overflight. Because the onboard software already knows when the spacecraft will next pass over the emission, it can calculate how much space the observation will need on the solid-state recorder, along with all the other variables required for that pass. The software can also push other observations back by an orbit to prioritize emerging science.
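The example policy can be sketched in Python. Only the two-megawatt threshold comes from the text; the `Observation` class, the `plan_next_pass` function, the megabyte sizes, the rule that a triggered follow-up outranks everything already planned, and the greedy fill of the recorder are all hypothetical simplifications, not JPL’s onboard planner.

```python
from dataclasses import dataclass
from typing import List, Tuple

THERMAL_TRIGGER_MW = 2.0  # threshold from the example policy in the text


@dataclass
class Observation:
    target: str
    priority: int      # hypothetical convention: higher number = more important
    storage_mb: float  # recorder space the observation needs (hypothetical units)


def plan_next_pass(planned: List[Observation], emission_mw: float, target: str,
                   followup_mb: float, recorder_mb: float
                   ) -> Tuple[List[Observation], List[Observation]]:
    """Return (observations kept for the next pass, observations pushed to a later orbit)."""
    if emission_mw <= THERMAL_TRIGGER_MW:
        return list(planned), []  # policy not triggered: keep the existing plan
    # Assumption: a triggered follow-up outranks everything already planned.
    top = max((o.priority for o in planned), default=0)
    candidates = planned + [Observation(target, top + 1, followup_mb)]
    kept: List[Observation] = []
    deferred: List[Observation] = []
    used = 0.0
    # Greedily fill the solid-state recorder in priority order; anything that
    # does not fit is pushed off by an orbit.
    for obs in sorted(candidates, key=lambda o: o.priority, reverse=True):
        if used + obs.storage_mb <= recorder_mb:
            kept.append(obs)
            used += obs.storage_mb
        else:
            deferred.append(obs)
    return kept, deferred


planned = [Observation("glacier", 2, 400), Observation("coastline", 1, 300)]
kept, deferred = plan_next_pass(planned, emission_mw=3.5, target="volcano",
                                followup_mb=500, recorder_mb=1000)
print([o.target for o in kept], [o.target for o in deferred])
# ['volcano', 'glacier'] ['coastline']
```

Because the fill is greedy, a small low-priority observation can still fit after a larger one is deferred; a real planner would weigh many more constraints than recorder space.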

2020 and beyond

“That’s a great example of things that we were able to do and that are now being pushed in the future to more complicated missions,” Chien said. “Now we’re looking at putting a similar scheduling system onboard the Mars 2020 rover, which is much more complicated. Since a satellite follows a very predictable orbit, the only variable that an orbiter has to deal with is the science data it collects.

“When you plan to take a picture of this volcano at 10am, you pretty much take a picture of the volcano at 10am, because it’s very easy to predict,” Chien continued. “What’s unpredictable is whether the volcano is erupting or not, so the AI is used to respond to that.” A rover, on the other hand, has to deal with a vast collection of environmental variables that shift moment by moment.

Even for an orbiting satellite, scheduling observations can be very complicated. So AI plays an important role even when a human is making the decisions, said Chien. “Depending on mission complexity and how many constraints you can get into the software, it can be done completely automatically or with the AI increasing the person’s capabilities. The person can fiddle with priorities and see what different schedules come out and explore a larger proportion of the space in order to come up with better plans. For simpler missions, we can just automate that.”
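The interactive use Chien describes, adjusting priorities and comparing the schedules that come out, can be illustrated with a toy greedy scheduler in Python. The `schedule` function, observation names, time windows, and priority values are hypothetical, and real mission planners handle far more constraints than simple time overlap.

```python
from typing import Dict, List, Tuple

Request = Tuple[str, int, int]  # (name, start_minute, end_minute), end exclusive


def schedule(requests: List[Request], priority: Dict[str, int]) -> List[str]:
    """Admit requests in descending priority, skipping any that overlap one already admitted."""
    admitted: List[Request] = []
    for name, start, end in sorted(requests, key=lambda r: priority[r[0]], reverse=True):
        # Two windows are compatible only if one ends before the other starts.
        if all(end <= s or start >= e for _, s, e in admitted):
            admitted.append((name, start, end))
    # Report the chosen observations in time order.
    return [name for name, _, _ in sorted(admitted, key=lambda r: r[1])]


requests = [("volcano", 0, 30), ("glacier", 20, 50), ("coast", 45, 60)]
print(schedule(requests, {"volcano": 3, "glacier": 2, "coast": 1}))  # ['volcano', 'coast']
print(schedule(requests, {"volcano": 1, "glacier": 3, "coast": 2}))  # ['glacier']
```

Raising the glacier’s priority changes the outcome from two observations to one, which is the kind of trade-off a person exploring the schedule space would want to see before committing to a plan.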

Despite the lessons learned from EO-1, Chien said that spacecraft using AI remain “the exception, not the norm. I can tell you about different space missions that are using AI, but if you were to pick a space mission at random, the chance that it was using AI in any significant fashion is very low. As a practitioner, that’s something we have to increase uptake on. That’s going to be a big change.”